Nov 24, 2021

In this example, I will show you how to create a clustered WildFly environment on Kubernetes, using Nginx as the load balancer.

The source code is available in the GitLab repo.

The idea is to use the latest original images:

- nginx:latest

- wildfly:latest

Load Balancer part:

The ngx_http_upstream_module module is used to define groups of servers that can be referenced by the proxy_pass, fastcgi_pass, uwsgi_pass, scgi_pass, memcached_pass, and grpc_pass directives.

This upstream configuration file is generated by the script nginx-upstream.sh once the pods have been created.

In the end, you will see two new files, one for the frontend and one for the WildFly admin console:

    upstream appsrv {
        ip_hash;
        server 192.1.1.2:8080;
        server 192.1.1.4:8080;
    }
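The nginx-upstream.sh script itself lives in the repo and is not reproduced here. As a rough sketch of how such a file could be generated, assuming the pod IPs are already known, a small shell function can print the upstream block. The function name `render_upstream` and the `app=wildfly` label in the usage comment are my assumptions, not taken from the actual script.

```shell
#!/bin/sh
# Hypothetical helper (not the author's script): print an nginx upstream
# block with ip_hash for the given pod IPs.
# Usage: render_upstream NAME PORT IP...
render_upstream() {
  name=$1
  port=$2
  shift 2
  printf 'upstream %s {\n' "$name"
  printf '    ip_hash;\n'
  for ip in "$@"; do
    printf '    server %s:%s;\n' "$ip" "$port"
  done
  printf '}\n'
}

# Possible usage (the label selector app=wildfly is an assumption):
#   ips=$(kubectl get pods -l app=wildfly -o jsonpath='{.items[*].status.podIP}')
#   render_upstream appsrv 8080 $ips > /cloud/kubernetes/wildfly/config/upstream.conf
```

Calling `render_upstream appsrv 8080 192.1.1.2 192.1.1.4` prints a block like the one above.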

Both files (upstream.conf and upstreamcp.conf) are loaded automatically by the nginx server, because the folder is mounted at /etc/nginx/conf.d/, which the default nginx.conf of the official image includes:

    volumeMounts:
      - name: config
        mountPath: /etc/nginx/conf.d/
    volumes:
      - name: config
        hostPath:
          path: /cloud/kubernetes/wildfly/config

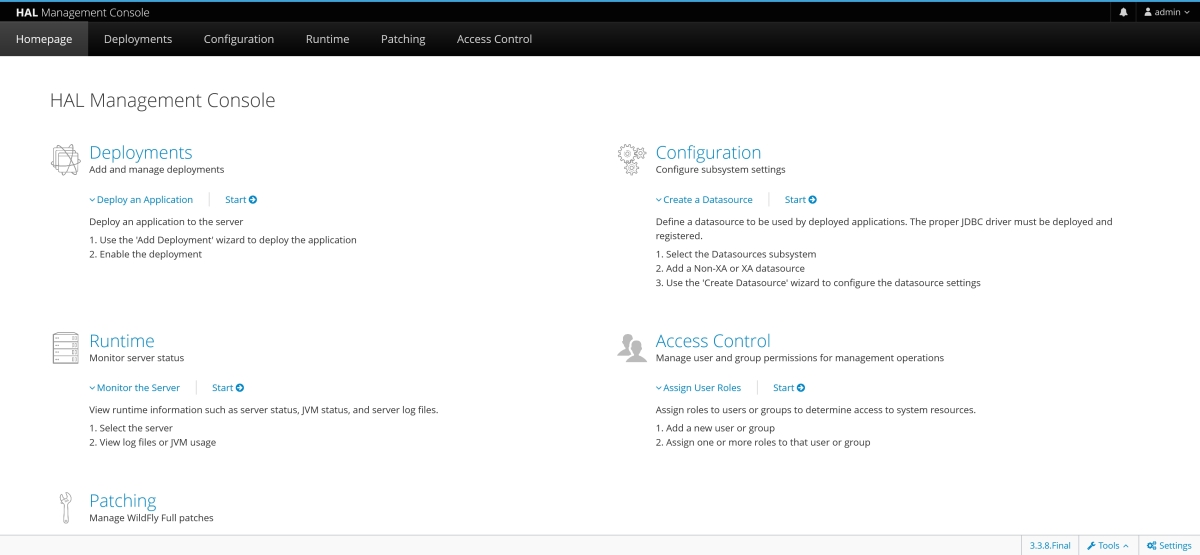

For the WildFly admin console, nginx needs some special configuration so that we can access it without errors. The block below is for /console; the same configuration is needed for /management and /auth:

    location /console {
        proxy_set_header Host $host:9990;
        proxy_set_header Origin http://$host:9990;
        proxy_redirect off;
        proxy_http_version 1.1;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_pass_request_headers on;
        proxy_pass http://appsrvcp;
    }
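Since the three admin paths share the same settings, the blocks can be generated instead of copied by hand. The following is only a sketch: the function name `render_admin_locations` is hypothetical, and the directives are simply the ones from the /console block above.

```shell
#!/bin/sh
# Hypothetical helper: print one location block per admin path, each with
# the same proxy settings as the /console block.
render_admin_locations() {
  for path in /console /management /auth; do
    printf 'location %s {\n' "$path"
    # Quoted delimiter: nginx variables such as $host are written literally.
    cat <<'EOF'
    proxy_set_header Host $host:9990;
    proxy_set_header Origin http://$host:9990;
    proxy_redirect off;
    proxy_http_version 1.1;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;
    proxy_pass_request_headers on;
    proxy_pass http://appsrvcp;
}

EOF
  done
}
```

The output can be pasted into the server block that listens for the admin console.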

By default, requests are distributed between the servers using a weighted round-robin balancing method.

ip_hash ensures that requests from the same client are always passed to the same server, except when that server is unavailable.

Cluster part:

WildFly JGroups: WildFly uses JGroups internally to discover other WildFly servers and to synchronize its services (clustering). Multicast is typically not available on Kubernetes cluster networks, which is why we must enable a specific JGroups discovery protocol, KUBE_PING.
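To make the discovery step more concrete: as far as I understand it, KUBE_PING asks the Kubernetes API for the pods in a namespace, optionally filtered by labels, and treats the returned pods as cluster members. The sketch below only builds the standard pod list path for such a query; the function name `kube_ping_query` and the example values are my assumptions.

```shell
#!/bin/sh
# Hypothetical helper: build the Kubernetes API path that lists the pods
# in a namespace, optionally filtered by a label selector.
# Usage: kube_ping_query NAMESPACE [LABELS]
kube_ping_query() {
  ns=${1:-default}
  labels=${2:-}
  path="/api/v1/namespaces/$ns/pods"
  if [ -n "$labels" ]; then
    path="$path?labelSelector=$labels"
  fi
  printf '%s\n' "$path"
}

# Possible check from a workstation (namespace and label are assumptions):
#   kubectl get --raw "$(kube_ping_query default app=wildfly)"
```

If this query returns no pods, or the pod's service account is not allowed to list pods, the servers will most likely not find each other.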

We need the latest kubeping libraries:

- common.jar -> https://search.maven.org/artifact/org.jgroups.kubernetes/common

- kubernetes.jar -> https://search.maven.org/artifact/org.jgroups.kubernetes/kubernetes

The configuration for these modules is in the modules.xml file in the config/kubeping folder. We have to copy them to the WildFly pods:

    containers:
      - name: wildfly
        image: jboss/wildfly:latest
        ...
        ...

To reconfigure an existing server profile with KUBE_PING, we use the following CLI batch, replacing the namespace, labels and stack name (tcp) with those of the target stack:

    /opt/jboss/wildfly/bin/jboss-cli.sh --file=/usr/share/jboss/config-server.cli
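The real batch is the config-server.cli file in the repo. For orientation only, here is a sketch of what a KUBE_PING batch of this kind might look like, written out by a small shell function. The function name `write_kubeping_cli`, the example namespace and labels, and the exact property syntax are assumptions on my side and may need adjusting for your WildFly version; how the CLI reaches the server (connected or embedded) is left out.

```shell
#!/bin/sh
# Hypothetical helper: write a jboss-cli batch that swaps MPING for
# KUBE_PING in the given JGroups stack. Not the author's config-server.cli.
# Usage: write_kubeping_cli FILE NAMESPACE LABELS [STACK]
write_kubeping_cli() {
  out=$1
  namespace=$2
  labels=$3
  stack=${4:-tcp}
  cat > "$out" <<EOF
batch
/subsystem=jgroups/stack=$stack/protocol=MPING:remove()
/subsystem=jgroups/stack=$stack/protocol=kubernetes.KUBE_PING:add(add-index=0,properties={namespace=>"$namespace",labels=>"$labels"})
run-batch
EOF
}

# Example (values are assumptions):
#   write_kubeping_cli /tmp/kubeping-example.cli default app=wildfly
```

The namespace and labels must match the ones the WildFly pods actually run with, otherwise discovery returns nothing.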

The config-server.cli file is in the config folder.

Check part:

You can see both servers entering the cluster in the log files:

    20:11:15,697 INFO [org.infinispan.CLUSTER] (ServerService Thread Pool -- 93) ISPN000094: Received new cluster view for channel ejb: [wildfly-666f497984-4ckjx|1] (2) [wildfly-666f497984-4ckjx, wildfly-666f497984-wbn6r]

    20:11:31,600 INFO [org.apache.activemq.artemis.core.server] (Thread-2 (ActiveMQ-server-org.apache.activemq.artemis.core.server.impl.ActiveMQServerImpl$6@764b6024)) AMQ221027: Bridge ClusterConnectionBridge@799069ee
    [name=$.artemis.internal.sf.my-cluster.808ed344-5150-11ec-a7cb-a63a32bd91ea, queue=QueueImpl[name=$.artemis.internal.sf.my-cluster.808ed344-5150-11ec-a7cb-a63a32bd91ea, postOffice=PostOfficeImpl
    [server=ActiveMQServerImpl::serverUUID=7e005fb9-5150-11ec-9a08-2e4030809ffc], temp=false]@2b9d2425 targetConnector=ServerLocatorImpl (identity=(Cluster-connection-bridge::ClusterConnectionBridge@799069ee
    [name=$.artemis.internal.sf.my-cluster.808ed344-5150-11ec-a7cb-a63a32bd91ea, queue=QueueImpl[name=$.artemis.internal.sf.my-cluster.808ed344-5150-11ec-a7cb-a63a32bd91ea, postOffice=PostOfficeImpl
    [server=ActiveMQServerImpl::serverUUID=7e005fb9-5150-11ec-9a08-2e4030809ffc], temp=false]@2b9d2425 targetConnector=ServerLocatorImpl [initialConnectors=[TransportConfiguration(name=http-connector,
    factory=org-apache-activemq-artemis-core-remoting-impl-netty-NettyConnectorFactory) ?httpUpgradeEndpoint=http-acceptor&activemqServerName=default&httpUpgradeEnabled=true&port=8080&host=192-168-28-13],
    discoveryGroupConfiguration=null]]::ClusterConnectionImpl@374999862[nodeUUID=7e005fb9-5150-11ec-9a08-2e4030809ffc, connector=TransportConfiguration(name=http-connector, factory=org-apache-activemq-artemis-core-remoting-impl-netty-NettyConnectorFactory)
    ?httpUpgradeEndpoint=http-acceptor&activemqServerName=default&httpUpgradeEnabled=true&port=8080&host=192-168-28-17, address=jms, server=ActiveMQServerImpl::serverUUID=7e005fb9-5150-11ec-9a08-2e4030809ffc]))
    [initialConnectors=[TransportConfiguration(name=http-connector, factory=org-apache-activemq-artemis-core-remoting-impl-netty-NettyConnectorFactory) ?httpUpgradeEndpoint=http-acceptor&activemqServerName=default&httpUpgradeEnabled=true&port=8080&host=192-168-28-13], discoveryGroupConfiguration=null]] is connected

To see new pods entering or being removed from the cluster, you can change the number of replicas with the command kubectl scale --replicas=4 -f wildfly
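After scaling, the member count reported in the ISPN000094 lines should change accordingly. As a small sketch, the count in parentheses can be pulled out of such a line with sed. The function name `cluster_size` and the deployment name in the usage comment are assumptions.

```shell
#!/bin/sh
# Hypothetical helper: read WildFly log lines on stdin and print the member
# count from every "ISPN000094: Received new cluster view" line.
cluster_size() {
  sed -n 's/.*ISPN000094:.*] (\([0-9][0-9]*\)) \[.*/\1/p'
}

# Possible usage (deploy/wildfly is an assumed name):
#   kubectl logs deploy/wildfly | cluster_size | tail -n 1
```

Fed with the first log line shown in the check part, the function prints the number of members in that view; other log lines produce no output.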